When I found out that the

Computer History Museum has a working IBM 1401 computer

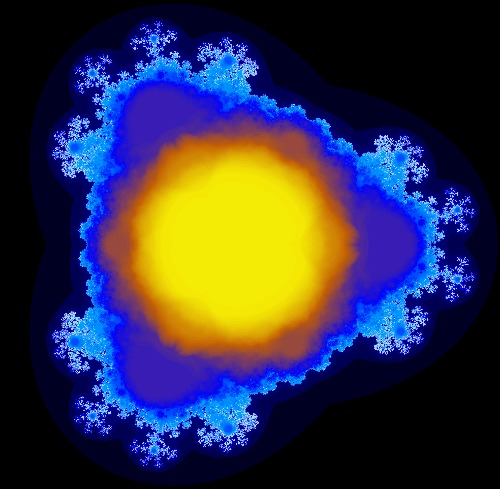

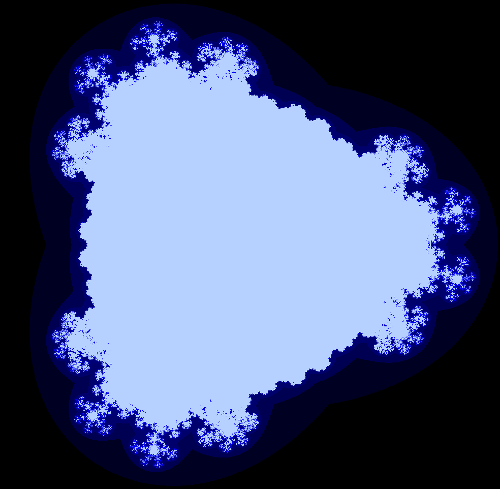

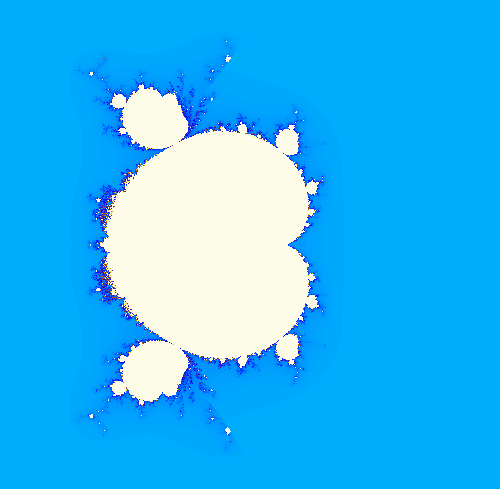

[1], I wondered if it could generate the Mandelbrot fractal.

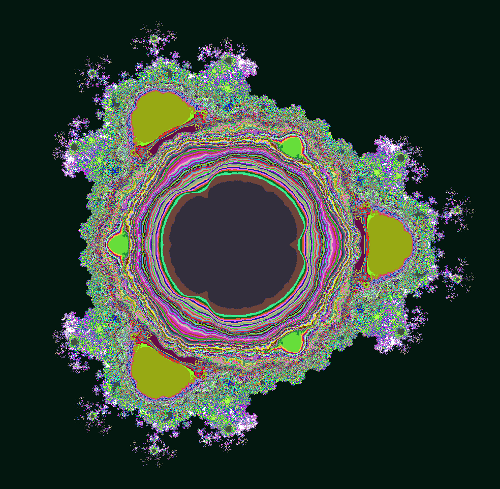

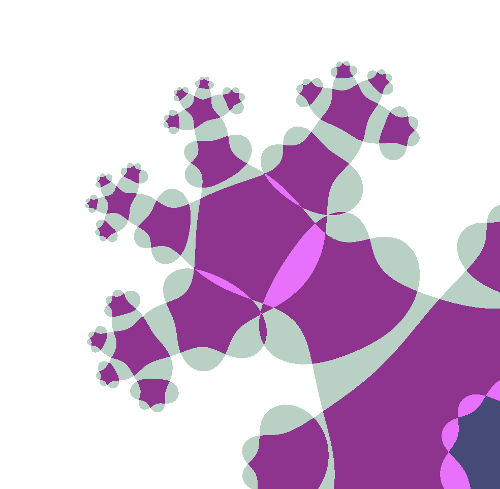

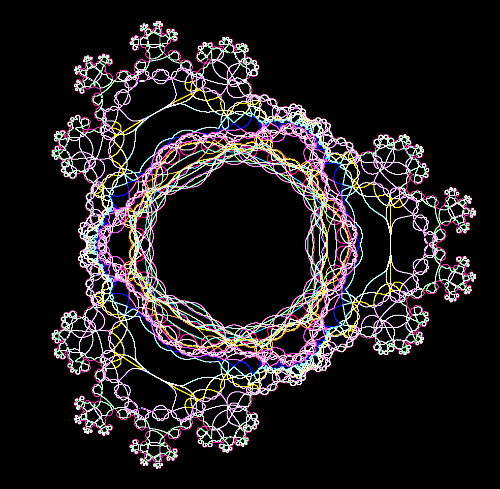

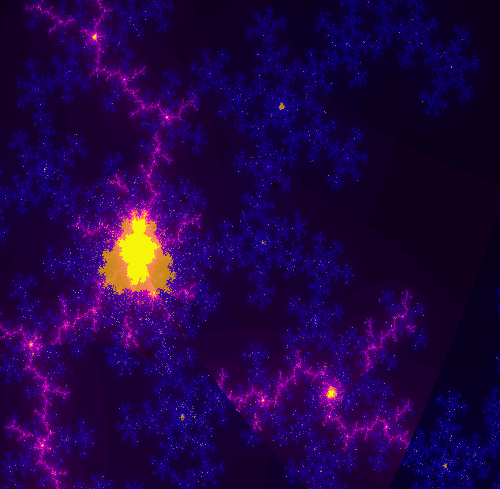

I wrote a fractal program in assembly language and the computer chugged away for 12 minutes to create the Mandelbrot image on its line printer.

In the process I learned a bunch of interesting things about the IBM 1401, which I discuss in this article.

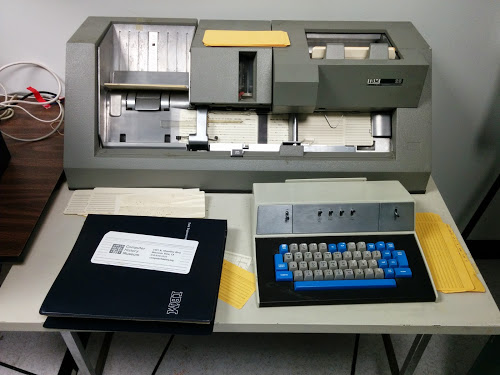

The IBM 1401 mainframe computer (left) at the Computer History Museum printing the Mandelbrot fractal on the 1403 printer (right). Note: this is a line printer, not a dot matrix printer.

The IBM 1401 computer was announced in 1959, and went on to become the best-selling computer of the mid-1960s, with more than 10,000 systems in use.

The 1401 leased for $2500 a month[2] (about $20,000 in current dollars), a low price that let many more companies use computers.

Even a medium-sized business could use the 1401 for payroll, accounting, inventory, order processing, invoicing, analysis, and many other tasks.

The 1401 was called the Model-T of the computer industry due to its low price and great popularity.[3]

Even for its time, the IBM 1401 had only moderate performance, especially compared to a high-end business computer like the IBM 7080 (rental fee: $48,000 a month).[2]

But the IBM 1401 became hugely popular because of its affordability, reliability, ease of use, high-quality printer and stylish appearance[4].

The 1401 was an early all-transistorized computer.

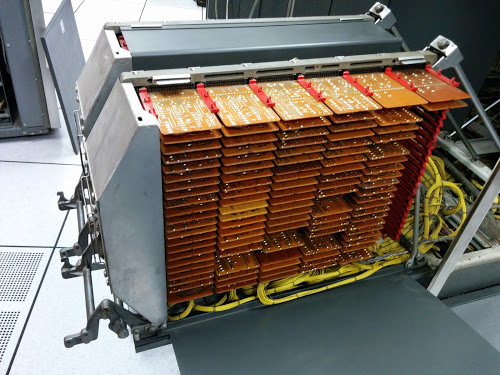

These weren't silicon transistors, though; they were germanium transistors, the technology that preceded silicon. The transistors and other components were mounted on circuit boards about the size of a playing card. These boards were called Standard Modular System (SMS) boards, and each one provided a function such as a flip-flop or simple logic. The IBM 1401 could contain thousands of SMS cards, depending on the features installed - the basic system had about 1,200 cards[5], while the system I used has 2881 SMS cards.

(For more information on SMS cards, see my earlier article.)

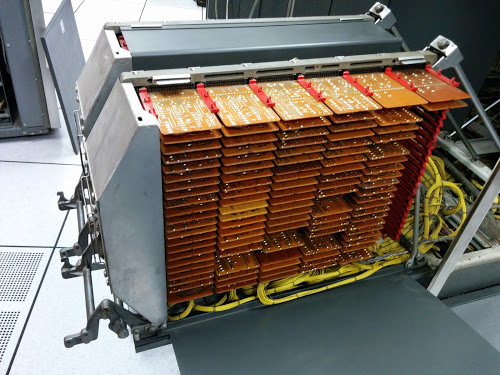

SMS cards inside the IBM 1401. These cards are part of the tape drive control, amplifying signals read from tape.

The SMS cards plug into racks (which IBM confusingly calls "gates") that fold out from the computer as shown below.

The 1401 is designed for easy maintenance - to access a gate, you just grab the handle and it swings out from the computer, exposing the wires and boards for maintenance. At the bottom of the gate, wiring harnesses connect the gate to other parts of the computer.[6] There are 24 of these gates in total.

The IBM 1401 computer is built from thousands of SMS circuit cards. This open rack (called a gate) shows about 150 SMS cards.

Unusual features of the IBM 1401

It's interesting to look at old computers because they do things very differently. Some of the unusual features of the IBM 1401 are that it used decimal arithmetic and 6-bit characters, it had arbitrary-length words, and additional instructions were available for a rental fee.

The IBM 1401 is based on decimal arithmetic, not binary. Of course it uses 0's and 1's internally, but numbers are stored as digits using binary coded decimal (BCD). The number 123 is stored as three characters: '1', '2', and '3'. If you add 7 and 8, you get the digit 1 and the digit 5. Addresses are in decimal, so storage is in multiples of 1000, not 1024: the system with 16K of memory stores exactly 16,000 characters. All arithmetic is done in base-10. So if you divide two numbers, the IBM 1401 does base-10 long division, in hardware.
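To make the digit-at-a-time idea concrete, here is a minimal Python sketch that adds two numbers stored as strings of decimal digits, starting from the low-order digit. It illustrates the arithmetic, not the hardware. The name `add_decimal` is my own, the sketch ignores signs, and it lengthens the result on a final carry, whereas the real machine works within a fixed-length field and flags overflow.

```python
def add_decimal(a: str, b: str) -> str:
    """Add two unsigned decimal digit strings, one digit at a time from the right."""
    width = max(len(a), len(b))
    a, b = a.zfill(width), b.zfill(width)
    digits, carry = [], 0
    for da, db in zip(reversed(a), reversed(b)):
        total = int(da) + int(db) + carry   # at most 9 + 9 + 1 = 19
        digits.append(str(total % 10))      # keep the low digit
        carry = total // 10                 # pass any carry to the next position
    if carry:
        digits.append("1")
    return "".join(reversed(digits))


print(add_decimal("7", "8"))       # 15
print(add_decimal("123", "999"))   # 1122
```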

The IBM 1401 does not use bytes.[9] Instead, it uses 6-bit BCD storage. Every character is stored as a 4-bit BCD digit with two extra bits called "zone bits", named A and B.[7] The two extra zone bits allow upper-case letters (and a few special symbols) to be stored, as well as digits.[8]
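The zone-bit scheme fits in a few lines of Python. The hypothetical `to_bcd` helper below returns the bits set for a blank, a digit, or an upper-case letter. It follows the standard IBM card code for letters: A-I use the 12 zone (bits B and A), J-R use the 11 zone (bit B), and S-Z use the 0 zone (bit A, starting at 2). This matches the zone pairing described in note 7, and note 7 also explains why the digit 0 is stored as 8+2. The sketch leaves out special characters, the check bit, and the word mark.

```python
def to_bcd(ch: str) -> str:
    """Return the zone and numeric bits (e.g. 'BA1') that store one 1401 character."""
    if len(ch) != 1:
        raise ValueError("expected a single character")
    if ch == " ":
        return ""                                      # a blank has no bits set
    if ch in "0123456789":
        zones, value = "", int(ch) or 10               # digit 0 is stored as 8+2
    elif "A" <= ch <= "I":
        zones, value = "BA", ord(ch) - ord("A") + 1    # 12 zone
    elif "J" <= ch <= "R":
        zones, value = "B", ord(ch) - ord("J") + 1     # 11 zone
    elif "S" <= ch <= "Z":
        zones, value = "A", ord(ch) - ord("S") + 2     # 0 zone, starting at 2
    else:
        raise ValueError(f"character not modeled here: {ch!r}")
    numeric = "".join(name for name, weight in (("8", 8), ("4", 4), ("2", 2), ("1", 1))
                      if value & weight)
    return zones + numeric


for ch in "70AJSZ":
    print(ch, to_bcd(ch))
```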

Using a byte as the unit of operation didn't become popular until later with the IBM System/360; in the early 1960s, computers often used strange word sizes such as 13, 17, 19, 22, 26, 33, 37, 41, 45, and 50 bits.[9]

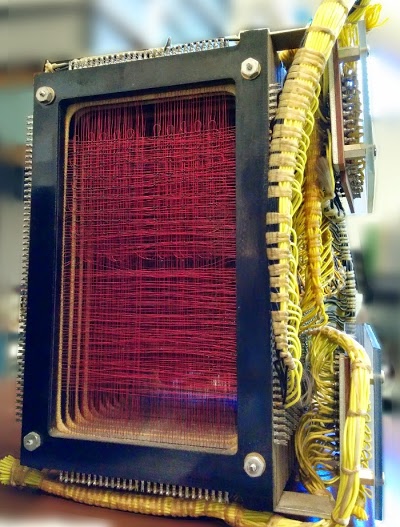

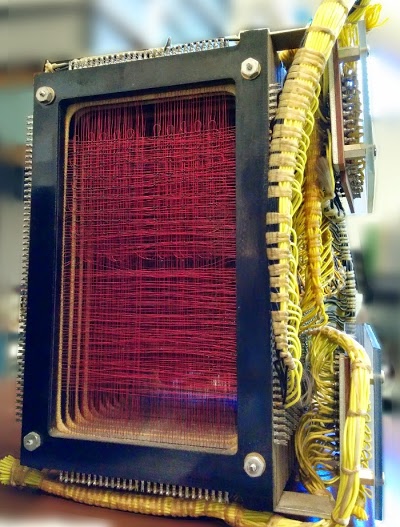

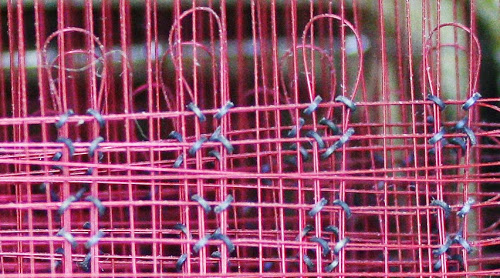

The photo below shows the core memory module from the IBM 1401, with 4,000 characters of memory. Each bit is stored in a tiny donut-shaped ferrite core with wires running through it.

The core module is more complex than you'd expect, with 16 layers (frames) in total. Eight frames hold the 6 bits of data, plus the word mark bits (explained below) and parity bits. Six frames hold data from the card reader brushes and the print hammers, for data and error checking.[10]

The remaining two frames are just used for wiring.

The 4000 character core memory module from the IBM 1401 computer requires a huge amount of wiring.

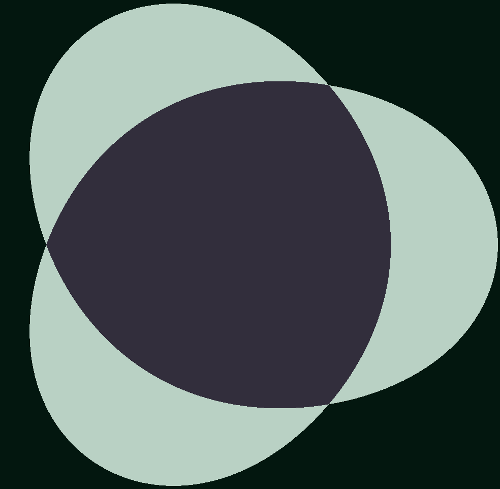

Probably the most unusual feature of the 1401 is that it uses variable-length words, with word marks indicating each word.

You might expect that variable-length words would let you use words of perhaps 8, 16, and 32 bits. But the IBM 1401 permitted words of arbitrarily many characters, up to the total size of memory! For instance, an instruction could move a 47-character string, or add 11-digit numbers. (Personally, I think it's easier to think of it as variable-length fields, rather than variable-length words.)

The word mark itself is a bit that is set on a memory location to indicate the boundary of a word (i.e. field).[11] An instruction on the IBM 1401 processes data through memory sequentially until it hits a word mark. It's important to remember that word marks are not part of the characters, but more like metadata, so they remain as new data records are read in and processed.[11]
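Here is a toy Python model of how an instruction finds its field. It assumes memory is a list of characters plus a separate set of word-marked addresses, both hypothetical stand-ins for core storage. As note 11 explains, an instruction is given the address of the field's low-order (rightmost) character. It scans toward lower addresses until it reaches the word mark at the start of the field. The marks live outside the characters, so new data can be written into the same locations without disturbing the field boundaries.

```python
def read_field(memory: list[str], word_marks: set[int], address: int) -> str:
    """Read the field ending at `address`, scanning down to its word mark."""
    chars = []
    while address >= 0:
        chars.append(memory[address])
        if address in word_marks:               # word mark = first character of the field
            return "".join(reversed(chars))
        address -= 1                            # move toward lower addresses
    raise ValueError("no word mark found before the start of memory")


memory = list("..0047123..")
word_marks = {2, 6}                             # fields occupy addresses 2-5 and 6-8
print(read_field(memory, word_marks, 5))        # 0047
memory[6:9] = list("456")                       # read a new record into the same locations
print(read_field(memory, word_marks, 8))        # 456: the word mark is still in place
```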

The main motivation behind variable-length words was to save expensive core memory, since each field length can be fit to the exact size required.

Another interesting thing about the IBM 1401 is that many instructions were extra-price options.

The "advanced programming" feature provided new instructions for moving records, storing registers, and using index registers; this required the installation of 105 new SMS cards and (coincidentally) cost $105 a month.

Even the comparison instruction cost extra. Because the 1401 uses BCD, you can't just subtract two characters to compare them as you would on most processors. Instead, the 1401 uses a bunch of additional circuitry for comparison, about 37 SMS cards for which you pay $75 a month.[12] Renting the printer buffer feature for $375 a month added a separate core storage module, 267 more SMS cards, and two new instructions.

The bit test instruction cost only $20 a month and additional card punch control instructions were $25 a month.

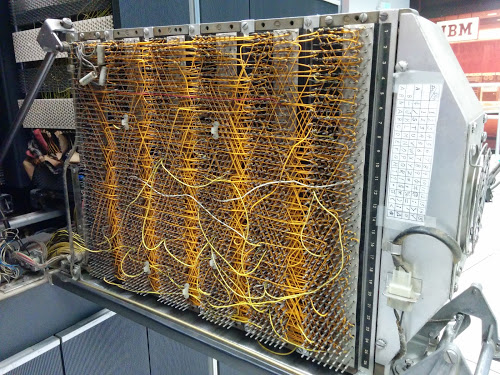

If you bought one of these features, an IBM engineer would install the new cards and move some wires on the backplane to enable the feature. The wire-wrapped backplanes made it relatively easy to update the wiring in the field.

The 1401 could be expanded up to 16,000 characters of core memory storage: 4,000 characters in the 1401 itself, and 12,000 characters in a 1406 expansion box, about the size of a dishwasher. The 12K expansion sold for $55,100 (about $4.60 per character), or rented for $1,575 a month. (You can see why using memory efficiently was important.) Along with the expanded memory came additional instructions to manipulate the larger addresses.

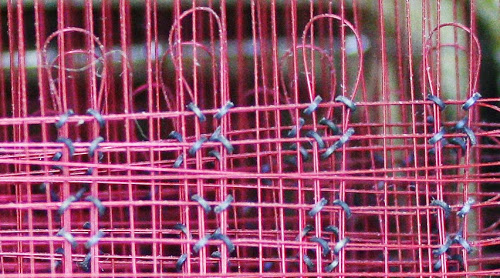

The tiny magnetic cores providing storage inside the IBM 1401's 4,000 character memory. Wires pass through each core to read and write memory. You can see multiple layers of cores in this photo.

One feature that you'd expect a computer to have is a subroutine call instruction and a stack. This is something the 1401 didn't have.

To call a subroutine on the IBM 1401, you jump to the start of the subroutine. The subroutine then stores the return address into a jump instruction at the end, actually modifying the code, so at the end of the subroutine it jumps back to the caller.[13] If you want recursion, you're on your own.
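The self-modifying return can be sketched with a toy interpreter in Python. This is a hypothetical model, not 1401 machine code. Instructions are mutable `[opcode, operand]` pairs, and the opcodes are made up. A branch remembers the address that follows it, which is my simplification of how the return address reaches the subroutine. The subroutine's first instruction then copies that address into the operand of its own exit branch. A second call from a different place overwrites the exit address, which is why recursion doesn't work.

```python
def run(program: dict[int, list], start: int = 0) -> list[str]:
    """Interpret a toy program of mutable [opcode, operand] instructions."""
    pc, saved, output = start, None, []
    while True:
        op, arg = program[pc]
        if op == "B":                  # branch, remembering the address after it
            saved, pc = pc + 1, arg
        elif op == "SAVE":             # patch the operand of instruction `arg`
            program[arg][1] = saved    # self-modifying code
            pc += 1
        elif op == "OUT":
            output.append(arg)
            pc += 1
        elif op == "H":                # halt
            return output
        else:
            raise ValueError(f"unknown opcode {op!r}")


program = {
    0: ["OUT", "main"],
    1: ["B", 10],          # call the subroutine
    2: ["OUT", "back"],
    3: ["B", 10],          # call it again from a different place
    4: ["H", None],
    10: ["SAVE", 12],      # subroutine entry: store the return address in the exit branch
    11: ["OUT", "sub"],
    12: ["B", None],       # exit branch; its operand is filled in at run time
}
output = run(program)
print(output)              # ['main', 'sub', 'back', 'sub']
```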

Some advanced features of the 1401

Compared to modern computers, the IBM 1401 is extremely slow and limited. But it's not as primitive as you might expect and it has several surprisingly advanced features.

One complex feature of the IBM 1401 is Editing, which is kind of like printf implemented in hardware. The Edit instruction takes a number such as 00123456789 and a format string.

The computer removes leading zeros and inserts commas as needed, producing an output such as 1,234,567.89.

With the optional Expanded Editing feature (just $20/month more), you can obtain floating asterisks (******1,234.56) or a floating dollar sign ($1,234.56), which is convenient for printing checks.

Keep in mind that this formatting is not done with a subroutine; it is implemented entirely in hardware, with the formatting applied by discrete transistors.
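To show what editing does (not how the transistors do it), here is a simplified Python model. The control-word syntax is my own assumption: each `_` is a slot for the next digit, and other characters are copied as-is. Everything before the first significant digit is suppressed, stopping at the decimal point. The `fill` and `float_dollar` options imitate the asterisk protection and floating dollar sign of Expanded Editing. The real 1401 control characters and rules differ in detail.

```python
def edit(digits: str, control: str, fill: str = " ", float_dollar: bool = False) -> str:
    """Simplified 1401-style editing; '_' in `control` marks a digit slot (my convention)."""
    if control.count("_") != len(digits):
        raise ValueError("control word needs exactly one slot per digit")
    source = iter(digits)
    out = [next(source) if ch == "_" else ch for ch in control]
    # Zero suppression: everything before the first significant digit
    # (or the decimal point, whichever comes first) is replaced by `fill`.
    first = next((i for i, ch in enumerate(out) if ch == "." or ch in "123456789"), len(out))
    for i in range(first):
        out[i] = fill
    if float_dollar and first > 0:
        out[first - 1] = "$"           # dollar sign floats next to the first digit
    return "".join(out)


print(edit("00123456789", "___,___,___.__").strip())                     # 1,234,567.89
print(edit("00000123456", "___,___,___.__", fill="*"))                   # ******1,234.56
print(edit("00000123456", "___,___,___.__", float_dollar=True).strip())  # $1,234.56
```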

Another advanced feature of the IBM 1401 is extensive checking for errors.

With tens of thousands of components on thousands of boards, many things can go wrong.

The 1401 catches malfunctions so they don't cause a catastrophe (such as printing million dollar payroll checks).

First, the memory, internal data paths, instruction decode, and BCD conversion are all protected by parity and validity checks.

The ALU uses qui-binary addition to detect arithmetic errors.

The card reader reads each card twice and compares the results.[10]

The 1401 verifies the printer's operation on each line. (The read, punch, and print checks use the additional core memory planes discussed earlier.)

As a result, the 1401 turned out to be remarkably reliable.
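Qui-binary represents each decimal digit with two groups of lines. The quinary part is 0, 2, 4, 6, or 8, the binary part is 0 or 1, and exactly one line is on in each group. The Python sketch below models only that encoding and the validity check. The names are mine, and it doesn't model the 1401's adder. It shows why a single stuck or dropped bit is always detected: the error leaves one group with zero or two lines on.

```python
QUINARY = (0, 2, 4, 6, 8)


def to_quibinary(d: int) -> tuple[tuple[int, ...], tuple[int, ...]]:
    """Encode a digit 0-9 as (quinary bits for 0,2,4,6,8; binary bits for 0,1)."""
    if not 0 <= d <= 9:
        raise ValueError("not a decimal digit")
    q = tuple(1 if d - d % 2 == w else 0 for w in QUINARY)
    b = (1, 0) if d % 2 == 0 else (0, 1)
    return q, b


def is_valid(q, b) -> bool:
    """A correct digit has exactly one quinary bit and exactly one binary bit on."""
    return sum(q) == 1 and sum(b) == 1


def from_quibinary(q, b) -> int:
    if not is_valid(q, b):
        raise ValueError("qui-binary check failed")
    return QUINARY[q.index(1)] + b.index(1)


# Every single-bit error in every digit is caught.
for d in range(10):
    q, b = to_quibinary(d)
    assert from_quibinary(q, b) == d
    bits = list(q + b)
    for i in range(len(bits)):
        flipped = bits.copy()
        flipped[i] ^= 1
        assert not is_valid(tuple(flipped[:5]), tuple(flipped[5:]))
```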

Because the IBM 1401 has variable word length, it can perform arbitrary-precision arithmetic. For instance, it can multiply or divide thousand-digit numbers with a single instruction. Try doing that on your Intel processor! (I tried multiplying 1000-digit numbers on the 1401; it takes just under a minute.) Hardware multiply/divide is another extra-cost feature; to meet the 1401's price target, they made it an option with the relatively steep price of $325 per month. You do get a lot of circuitry for that price, though - about 246 additional SMS cards installed in two gates.[14]

And remember, this is decimal multiplication and division, which is much more difficult to do in hardware than binary.
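Here is a rough Python sketch of decimal multiplication by repeated addition. For each digit of the multiplier, the multiplicand is shifted by that digit's position and added that many times. It's meant to show the kind of digit-by-digit work involved. It ignores signs and the 1401's field layout, and it doesn't include the doubling optimization described in note 14. The function names are my own.

```python
def add_decimal(a: str, b: str) -> str:
    """Add two unsigned decimal digit strings, right to left with carries."""
    width = max(len(a), len(b))
    a, b = a.zfill(width), b.zfill(width)
    digits, carry = [], 0
    for da, db in zip(reversed(a), reversed(b)):
        total = int(da) + int(db) + carry
        digits.append(str(total % 10))
        carry = total // 10
    if carry:
        digits.append("1")
    return "".join(reversed(digits))


def multiply_decimal(multiplicand: str, multiplier: str) -> str:
    """Multiply by repeatedly adding the shifted multiplicand, one multiplier digit at a time."""
    product = "0"
    for position, digit in enumerate(reversed(multiplier)):
        shifted = multiplicand + "0" * position   # multiplicand times 10**position
        for _ in range(int(digit)):
            product = add_decimal(product, shifted)
    return product.lstrip("0") or "0"


a = "31415926535897932384626433832795028841971693993751"
b = "27182818284590452353602874713526624977572470936999"
print(multiply_decimal(a, b) == str(int(a) * int(b)))  # True
```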

The 1401 I used is the Sterling model, which supports arithmetic on pounds, shillings, and pence, a surprising thing to see implemented in hardware.

(Up until 1971, British currency was expressed in pounds, shillings, and pence, with 12 pence in a shilling and 20 shillings in a pound. This makes even addition complicated, as tourists often discovered.) By supporting currency arithmetic in hardware, the 1401 made code faster and simpler.[15]
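The mixed-radix carries are easy to see in a small Python sketch. Amounts here are plain (pounds, shillings, pence) integer tuples, which is my own simplification. It doesn't model the compressed one-character shilling and pence fields described in note 15.

```python
def add_lsd(a: tuple[int, int, int], b: tuple[int, int, int]) -> tuple[int, int, int]:
    """Add two (pounds, shillings, pence) amounts: 12 pence = 1 shilling, 20 shillings = 1 pound."""
    pence = a[2] + b[2]
    shillings = a[1] + b[1] + pence // 12      # carry whole shillings out of the pence
    pounds = a[0] + b[0] + shillings // 20     # carry whole pounds out of the shillings
    return pounds, shillings % 20, pence % 12


print(add_lsd((3, 7, 8), (2, 15, 9)))   # (6, 3, 5)
print(add_lsd((1, 19, 11), (0, 0, 1)))  # (2, 0, 0)
```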

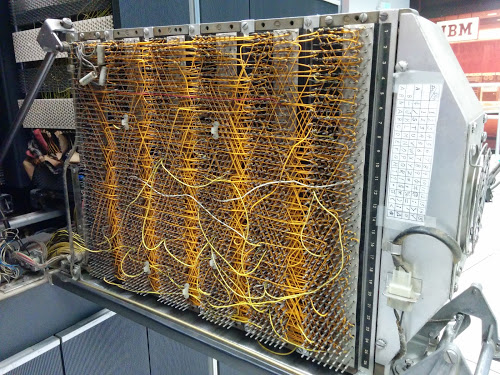

A maze of wire-wrapped wires on the back of a gate connects the circuits of the IBM 1401 computer.

The wiring was installed by automated machinery, but the wiring could be modified by field engineers as needed.

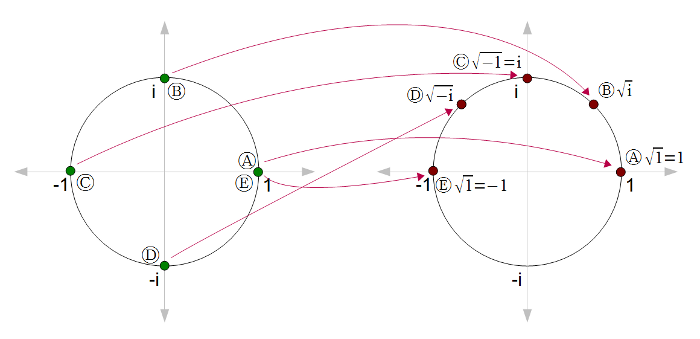

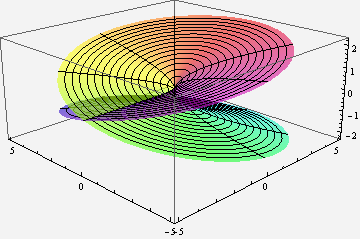

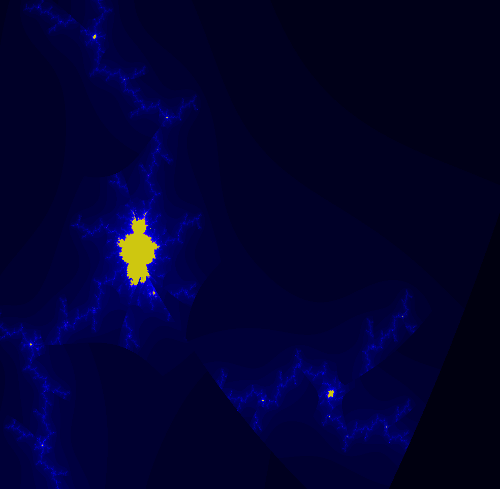

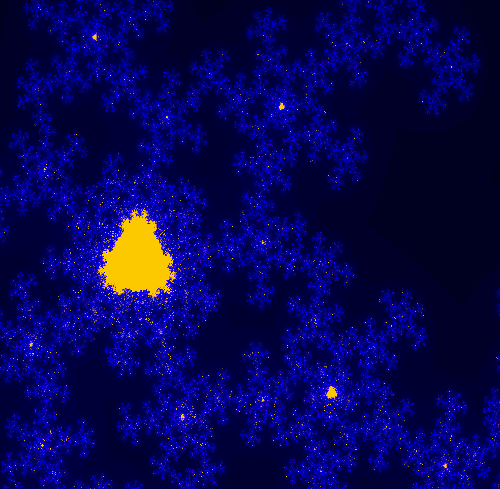

Implementing the Mandelbrot in 1401 assembly language

Writing the Mandelbrot set code on the 1401 was a bit tricky since I did it in assembly language (called Autocoder). The hardest part was thinking about word marks. Another complication was that the 1401 doesn't have native floating-point arithmetic, so I used fixed point: I scaled each number by 10000, so I could represent 4 decimal places with an integer. The 1401 is designed for business applications, not scientific applications, so it's not well-suited for fractal generation. But it still got the job done.
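The fixed-point scheme can be sketched in Python. A value is stored as an integer scaled by 10000, so multiplying two values gives a result scaled by 10000 twice, and the extra four digits have to be dropped. The program below does that by moving all but the last four digits (`MCW ZR2-4, ZR`) and then copying the sign (`MZ ZR2, ZR`), which amounts to truncating toward zero. Python's `//` rounds toward negative infinity instead, so this sketch works on the magnitude and reapplies the sign. The function names are my own.

```python
SCALE = 10_000  # four decimal places, as in the 1401 program


def to_fixed(x: float) -> int:
    """Convert a real number to a scaled integer."""
    return round(x * SCALE)


def fixed_mul(a: int, b: int) -> int:
    """Multiply two scaled values, dropping the extra four digits (truncating toward zero)."""
    product = a * b                     # scaled by SCALE twice
    magnitude = abs(product) // SCALE   # drop the low-order four digits
    return magnitude if product >= 0 else -magnitude


print(fixed_mul(to_fixed(1.5), to_fixed(-0.25)))  # -3750, i.e. -0.375
print(fixed_mul(-1, 5000), -1 * 5000 // SCALE)    # 0 -1: truncation vs. floor
```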

The 1401 didn't need to be programmed in assembly language - it supports languages such as Fortran and COBOL - but I wanted the full 1401 experience. It does amaze me, though, that you can run a COBOL compiler on a machine with just 4,000 characters of memory. The Fortran compiler required a machine with 8,000 memory locations; in order to fit, it ran in 63 separate phases.

The assembly language code for the Mandelbrot fractal is shown below.

The first part of the code defines constants and variables with DCW.

This is followed by three nested loops to loop over each row, each column, and the iterations for each pixel.

Some of the instructions in the code are M (multiply), A (add), S (subtract), and C (compare). Comments start with asterisks.

                   JOB  MANDELBROT
         *GENERATES A MANDELBROT SET ON THE 1401
         *KEN SHIRRIFF HTTP://RIGHTO.COM
                   CTL  6641
                   ORG  087
         X1        DCW  001                 *INDEX 1, COL COUNTER TO STORE PIXEL ON LINE
                   ORG  333
         *
         *VALUES ARE FIXED POINT, I.E. SCALED BY 10000
         *Y RANGE (-1, 1). 60 LINES YIELDS INC OF 2/60*10000
         *
         YINC      DCW  333
         XINC      DCW  227                 *STEP X BY .0227
         *
         *Y START IS -1, MOVED TO -333*30 FOR SYMMETRY
         *
         Y0        DCW  -09990              *PIXEL Y COORDINATE
         *
         *X START IS -2.2
         *
         X0INIT    DCW  -22000              *LEFT HAND X COORDINATE
         X0        DCW  00000               *PIXEL X COORDINATE
         ONE       DCW  001
         ZR        DCW  00000               *REAL PART OF Z
         ZI        DCW  00000               *IMAGINARY PART OF Z
         ZR2       DCW  00000000000         *ZR^2
         ZI2       DCW  00000000000         *ZI^2
         ZRZI      DCW  00000000000         *2*ZR*ZI
         ZMAG      DCW  00000000000         *MAGNITUDE OF Z: ZR^2 + ZI^2
         TOOBIG    DCW  00400000000         *4 (SCALED BY 10000 TWICE)
         I         DCW  00                  *ITERATION LOOP COUNTER
         ROW       DCW  01
         ROWS      DCW  60
         COLS      DCW  132
         MAX       DCW  24                  *MAXIMUM NUMBER OF ITERATIONS
         *
         *ROW LOOP
         *X1 = 1 (COLUMN INDEX)
         *X0 = -2.2 (X COORDINATE)
         *
         START     LCA  ONE,X1              *ROW LOOP: INIT COL COUNT
                   LCA  X0INIT,X0           *X0 = X0INIT
                   CS   332                 *CLEAR PRINT LINE
                   CS                       *CHAIN INSTRUCTION
         *
         *COLUMN LOOP
         *
         COLLP     LCA  @00@,I              *I = 0
                   MCW  X0,ZR               *ZR = X0
                   MCW  Y0,ZI               *ZI = Y0
         *
         *INNER LOOP:
         *ZR2 = ZR^2
         *ZI2 = ZI^2
         *IF ZR2+ZI2 > 4: BREAK
         *ZI = 2*ZR*ZI + Y0
         *ZR = ZR2 - ZI2 + X0
         *
         INLP      MCW  ZR,ZR2-6            *ZR2 = ZR
                   M    ZR,ZR2              *ZR2 *= ZR
                   MCW  ZI,ZI2-6            *ZI2 = ZI
                   M    ZI,ZI2              *ZI2 *= ZI
                   MCW  ZR2,ZMAG            *ZMAG = ZR^2
                   A    ZI2,ZMAG            *ZMAG += ZI^2
                   C    TOOBIG,ZMAG         *IF ZMAG > 4: BREAK
                   BH   BREAK
                   MCW  ZI,ZRZI-6           *ZRZI = ZI
                   M    ZR,ZRZI             *ZRZI = ZI*ZR
                   A    ZRZI,ZRZI           *ZRZI = 2*ZI*ZR
                   MCW  ZRZI-4,ZI           *ZI = ZRZI (/10000)
                   MZ   ZRZI,ZI             *TRANSFER SIGN
                   A    Y0,ZI               *ZI += Y0
                   S    ZI2,ZR2             *ZR2 -= ZI2
                   MCW  ZR2-4,ZR            *ZR = ZR2 (/10000)
                   MZ   ZR2,ZR              *TRANSFER SIGN
                   A    X0,ZR               *ZR += X0
         *
         *IF I++ != MAX: GOTO INLP
         *
                   A    ONE,I               *I++
                   C    MAX,I               *IF I != MAX THEN LOOP
                   BU   INLP
                   MCW  @X@,200&X1          *STORE AN X INTO THE PRINT LINE
         BREAK     C    X1,COLS             *COL LOOP CONDITION
                   A    ONE,X1
                   A    XINC,X0             *X0 += 0.0227
                   BU   COLLP
                   W                        *WRITE LINE
         *
         *Y0 += YINC
         *IF ROW++ != ROWS: GOTO START
         *
                   C    ROW,ROWS            *ROW LOOP CONDITION
                   A    ONE,ROW
                   A    YINC,Y0             *Y0 += 0.0333
                   BU   START
         FINIS     H    FINIS               *HALT LOOP
                   END  START

I assembled the code and ran it in the ROPE simulator before using the real computer.[16]
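Before punching cards, it can also help to check the algorithm in a modern language. Below is a Python translation of the Autocoder program above. It is my own sketch, not something run on the 1401. It keeps the same fixed-point scale, start values, 60×132 grid, and 24-iteration limit. Like the original, it starts z at c and prints an X for points that never escape. One assumption: it uses an x step of .0227 (the value in the `X0 += 0.0227` comment), so the 132 columns span roughly -2.2 to 0.8.

```python
SCALE = 10_000  # fixed point: four decimal places


def trunc_div(n: int, d: int) -> int:
    """Divide, truncating toward zero, like dropping the low-order digits of a signed field."""
    q = abs(n) // d
    return q if n >= 0 else -q


def mandelbrot(rows: int = 60, cols: int = 132, max_iter: int = 24) -> list[str]:
    x_init, x_inc = -22_000, 227      # X0INIT = -2.2; x step .0227 (assumed, see text)
    y, y_inc = -9_990, 333            # Y0 = -0.999, YINC = .0333
    too_big = 4 * SCALE * SCALE       # TOOBIG: 4, scaled by 10000 twice
    lines = []
    for _ in range(rows):
        line = [" "] * cols           # like CS: start with a clear print line
        x = x_init
        for col in range(cols):
            zr, zi = x, y             # as in the listing, z starts at c
            for _ in range(max_iter):
                zr2, zi2 = zr * zr, zi * zi
                if zr2 + zi2 > too_big:
                    break             # escaped: leave the position blank
                zi = trunc_div(2 * zr * zi, SCALE) + y
                zr = trunc_div(zr2 - zi2, SCALE) + x
            else:
                line[col] = "X"       # never escaped: print an X
            x += x_inc
        lines.append("".join(line).rstrip())
        y += y_inc
    return lines


if __name__ == "__main__":
    print("\n".join(mandelbrot()))
```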

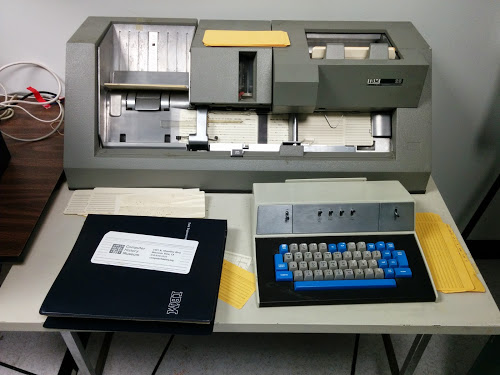

The cards were punched automatically by an IBM 029 keypunch controlled by a PC through a bunch of USB-controlled relays. The photo below shows the keypunch in operation. Each blank card drops down from the feeder in the upper right. The card is punched as it moves to the left. Punched cards are then flipped up and stacked in the upper left area (empty in this picture).

An IBM 029 keypunch preparing a card deck that generates the Mandelbrot fractal.

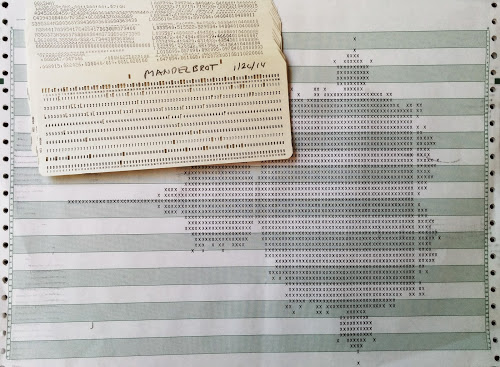

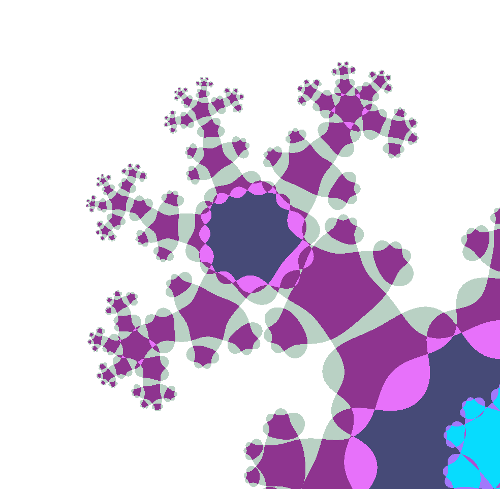

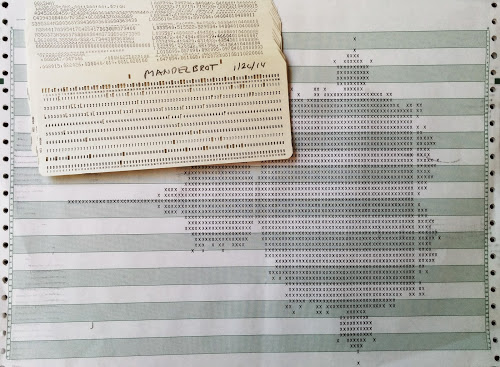

The resulting card deck is shown below, along with the output of execution. The program fits onto just 16 cards, but the card format is a bit unusual.

The machine code for the Mandelbrot program is punched into the left half of each card, with code such as M384417A395417.

An interesting thing about the 1401 is the machine code is almost human-readable.

M384417 means Move field from address 384 to address 417. A395417 means Add the number at address 395 to the number at address 417.

The text on these cards is the actual machine code that gets executed, not the assembly code. Since the machine is character-based, not binary, there's no difference between the characters "428" and the address 428.
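Because the machine code is just characters, splitting it into fields takes only string slicing. The Python sketch below decodes the example from the card under one explicit assumption: every instruction is seven characters, a one-character op code plus two three-digit addresses. On the real 1401, instruction lengths vary and are set by word marks. Addresses above 999 also use zone bits, which this sketch doesn't handle. The names in `OPS` cover only the two instructions described above.

```python
OPS = {"M": "move", "A": "add"}  # only the two op codes described in the text


def decode(code: str) -> list[tuple[str, int, int]]:
    """Split 7-character instructions into (operation, A-address, B-address)."""
    if len(code) % 7:
        raise ValueError("expected a whole number of 7-character instructions")
    instructions = []
    for i in range(0, len(code), 7):
        op, a_addr, b_addr = code[i], code[i + 1:i + 4], code[i + 4:i + 7]
        instructions.append((OPS.get(op, op), int(a_addr), int(b_addr)))
    return instructions


for op, a_addr, b_addr in decode("M384417A395417"):
    print(f"{op} {a_addr} -> {b_addr}")
```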

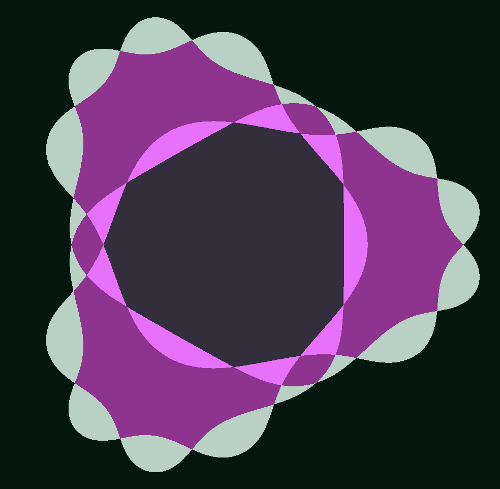

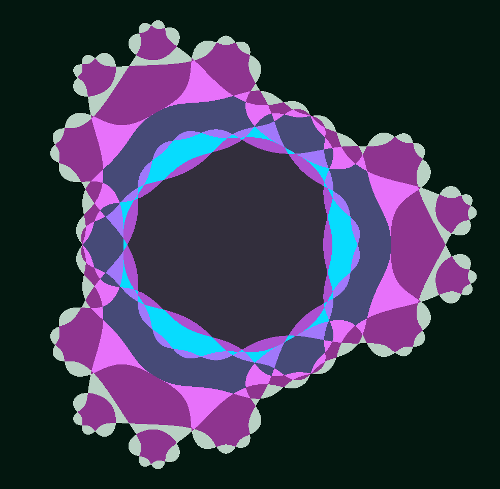

The card deck to generate the Mandelbrot fractal on the IBM 1401 computer, along with the output.

The white stripe through the fractal near the right is where a hammer in the printer malfunctioned.

If you look at the right half of the cards, there's something totally different going on, with text like L033540,515522,5259534. There's no operating system, so, incredibly, each card has code to copy its contents into the right place in memory (L instruction), add the word marks (, instruction), and load the next card. In other words, the right hand side of each card is a program that runs card-by-card to load into memory the program on the left hand side of the card deck, which is executed after the last card is loaded.[17]

To run the program, first you hit the "Power On" button on the IBM 1401 console. Relays clunk for a moment to power up the system and then the computer is ready to go (unlike modern computers that take so long to boot). You put the cards into the card reader and hit the "Load" button. The cards fly through the reader at the remarkable speed of 800 cards per minute so the Mandelbrot program loads in just over a second.

The console starts flickering as the program runs, and every few seconds the line printer hammers out another line of the fractal. After 12 minutes of execution, the fractal is done.

(Interestingly enough, the very first picture of a Mandelbrot set was printed on a line printer in 1978.[18])

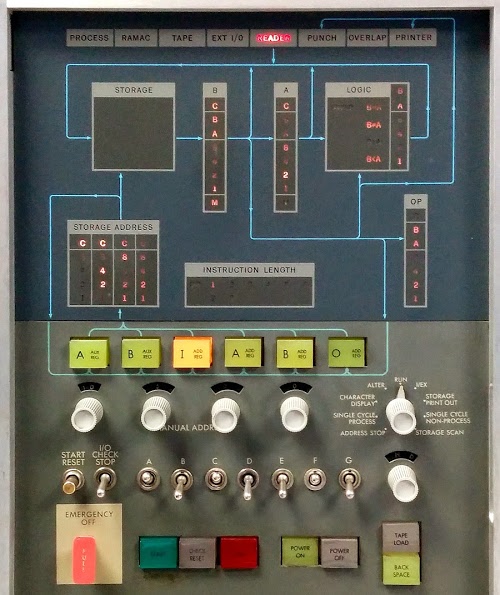

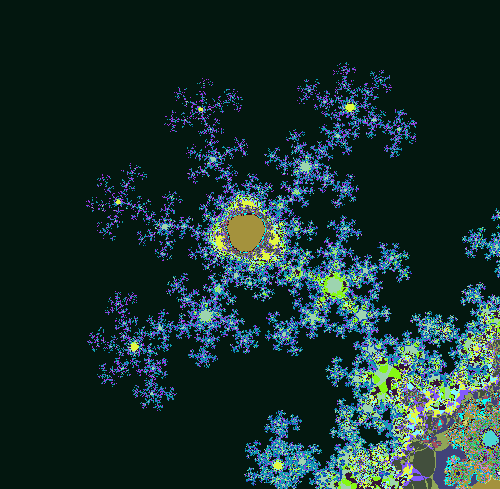

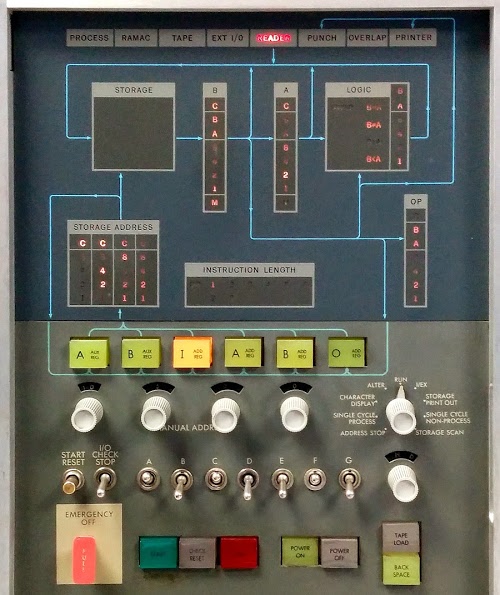

The console of the IBM 1401 mainframe. The top half shows the data flow through the computer, from storage to the B and A registers and the logic unit. Each 6-bit value is displayed as 1248ABC, where A and B are zone bits and C is the check (parity) bit. On the right, "OP" shows the operation being executed. Below are knobs to manually access memory. On the left, the "Start Reset" button clears an error, such as the card read failures I would hit. At the bottom are the important buttons to turn the computer on and off. Note the Emergency Off handle that immediately cuts the power.

Conclusions

Writing a Mandelbrot program for the IBM 1401 was an interesting project. You think a bit differently about programming when using decimal numbers and keeping track of word marks. But I have to say that comparing the performance with a current machine - not to mention the storage capacity - makes me appreciate Moore's Law.

The Computer History Museum in Mountain View runs demonstrations of the IBM 1401 on Wednesdays and Saturdays. It's amazing that the restoration team was able to get this piece of history working, so if you're in the area you should definitely check it out. The schedule is here. Tell the guys running the demo that you heard about it from me and maybe they'll run my prime number program or Pi program. You probably wouldn't want to wait for the Mandelbrot to run.

Thanks to the Computer History Museum and the members of the 1401 restoration team, Robert Garner, Ed Thelen, Van Snyder, and especially Stan Paddock. The 1401 team's website (ibm-1401.info) has a ton of interesting information about the 1401 and its restoration.

Notes and references

[1]

The Computer History Museum has two working 1401 computers: the "German 1401" and the "Connecticut 1401" (based on where they came from). I used the German 1401 since the Connecticut 1401 was undergoing card reader maintenance at the time.

[2]

While the $2500 per month rental rate is quoted in many places, the price could climb to $10,000 a month for a full system with multiple tape drives. The price varied greatly depending on the 1401 model, the amount of memory, and the peripherals (tape drives, card reader, printer, disk drive). The minimal configuration (1401 Model A, 1402 card reader, and 1403 printer) went for $2,475 a month (or could be purchased for $125,600 - about $1 million accounting for inflation). A "recommended" configuration with 8K of memory, processor options, and another printer went for $4,610 a month. Tape drives boosted the price further: $980 a month for the interface and $1100 a month for each 729 IV tape drive. A 4,000-character memory expansion cost $575 per month.

Detailed information on computers of the era, including rental rates, is available in the thousand-page survey "A Third Survey of Domestic Electronic Digital Computing Systems", Report No. 1115, March 1961 (1401 page).

The basic IBM rental price was for one 8-hour shift (176 hours a month). The computers included a time counter, and users were billed extra if they went over the allotted time. Customers often paid a higher rental fee so they could run 24/7.

[3]

The comment that the 1401 became the Model-T of the computer industry is from the article IBM System/360, by IBM VP Bob Evans. One piece of trivia from that article is the IBM 1620 rented for $1600 a month, making it the first IBM system renting for a price less than its model number.

[4]

The IBM 1401 has a very distinctive style, especially compared to earlier IBM computers (such as the 650 or 704) with a very utilitarian, industrial appearance.

The sleek, modernist style of the 1401 isn't arbitrary, but the result of a detailed industrial design process.

The book The Interface: IBM and the Transformation of Corporate Design has a very interesting discussion of the effort IBM put into industrial design. Edgar Kaufmann Jr. came up with important design ideas that were developed by Eliot Noyes.

Some design concepts were recessed pedestals for a feeling of floating and lightness, the concealment of most of the circuitry, expressing the "inherent drama" of computers, the carefully controlled color scheme, and modern materials for the cabinets.

The tape drives in particular were wildly successful at expressing the "inherent drama" of computing, to the point that spinning tape drives became a movie cliche (tvtropes: Computer Equals Tapedrive).

[5]

The number of SMS cards in an IBM 1401 depends on the model, the options installed, engineering changes (i.e. fixes) applied to the system, and the amount of memory in use. I got the number 1206 by analyzing the SMS plug chart and counting 933 basic cards, 267 Sterling basic cards, 6 power supply cards, and 11 cards for storage support.

This machine is the Sterling model, so it is slightly more complex than the regular model.

[6]

The IBM 1401 has 32 "potential" gates: 16 on the front and another 16 on the back, but only 24 of these are gates with circuitry.

The two potential panels in the upper left are taken up by the control panel, which swings out to reveal the core memory behind it.

Four panels have power supplies behind them (although much of the power supply is inside the card reader, strangely). Two more spots are occupied by the surprisingly thick cables connecting the 1401 to peripherals. This leaves 24 swing-out gates; some may be unused, depending on the optional features installed.

[7]

The zone bits are closely related to the zone punches in IBM punch cards. The top row of a punch card is the 12 (Y) zone, and the row beneath it is the 11 (X) zone. A number has one hole punched in the card row corresponding to the number (rows 0 through 9). A character usually has two holes punched: 1 through 9 for the BCD value, and a zone punch for the zone bits. The zone punch is card zone 11 for zone bit B set, card zone 12 for zone bits A and B, or card row 0 for zone bit A.

There are a few complications, though, that mess up this pattern.

First, for characters outside the 0-9 range, two digit punches are used: 8 and the digit for the low three bits. (e.g. '#' is stored as bits 8, 2, and 1, so it is punched as 8 and 3.)

Second, because card row 0 is used both for the digit 0 and as a zone punch, there is a conflict and the value 0 is treated as 10 in certain conditions (and punched as 8 and 2).

Because a blank has no punches and is stored as 0 internally, the digit 0 is stored as 10.

Different IBM systems treat these corner cases differently. Custom features were available for the 1401 to provide compatibility as needed.

[8]

The zone bits are used for a few things in addition to letters. A zone bit is added to the low-order digit of a number to indicate the sign of the number. Memory addresses are expressed as three digits, which would allow access to 1000 locations; by using zone bits, the three digit address can reach 16,000 locations. The zone bits also track overflow in arithmetic operations.

[9]

Originally byte referred to the group of bits used to encode a character, even if it wasn't 8 bits (see Planning a Computer System: Project Stretch, p40).

Some examples of unusual word lengths:

The RCA 601 supported 6, 8, 12, 16-bit, or variable-length words.

SPEC used 13-bit words.

The Hughes Airborne Computer used 17-bit words, while the Hughes D Pat used 19-bit words and the Hughes M 252 used 20-bit words.

The RW 300 used 18-bit words, while the RW 400 used 26-bit words.

The Packard Bell 250 used 22-bit words.

UNIVAC 1101 used 24-bit words.

ALWAC II used 32 bits plus sign (33 bits).

COMPAC used 37 bits (36 + sign).

AN/MJQ used 41-bit words.

SEAC used 45 bits (44 plus sign).

AN/FSQ 31 used 48 bit words.

ORACLE used 50-bit words.

The Rice University computer used 54-bit words.

Details on these computers are in A Third Survey of Domestic Electronic Digital Computing Systems.

[10]

The card reader reads each card twice and verifies that the hole count is the same for both reads. If the counts don't match, the card reader detects the error and stops. In more detail, each card is read "sideways", a row of 80 positions at a time. Two bits keep the status of each column. One bit is turned on if there is any hole. The other bit is toggled for each hole. (Thus, it's not exactly a count, simplifying the logic.) The process is reversed on the second read, so both bits will end up back at 0 for a correct read.

Since the next card is already getting read as the first card is getting verified, two sets of bits are needed, one for the first card and one for the second card. Thus, four planes of 80 bits each are used in total to verify card reads.

Each of the 240 brushes in the card reader has a separate wire that goes through a specific core in the 1401's core memory.

Likewise, each of the 132 print hammers in the printer is wired directly to an individual core.

Thus, there are thick cables containing hundreds of wires between the IBM 1401 and the card reader and the printer.

[11]

There are several details of word marks I'll point out. The IBM 1401 is obviously big-endian, since that's how numbers are punched on cards.

Since arithmetic operations need to start with the lowest-order digit, they start at the "end" of the number and work backwards through memory to the highest-order digit.

The consequence is an instruction is given the address of the end of the field and progresses to lower addresses until it hits the word mark, which is at the beginning of the field. This seems backwards if you're a C programmer, where you start at the beginning of a string and go forwards until you hit the end.

Word marks are also used to indicate the start of each instruction. Instructions can be 1 to 8 characters long, and the presence of a word mark controls the length. Bootstrapping the word marks for the first instructions loaded into the computer requires some tricks.

[12]

The comparison logic is more complex than you'd expect. Surprisingly, the comparison order doesn't match the binary order of characters. Also, comparisons aren't implemented with subtraction (like most processors). Instead, logic first determines if the characters are special characters or not - special characters are before regular characters (with some exceptions: for example, - is between I and J). Then a lot of AND-OR logic performs basically brute-force comparison by looking at various bit patterns. The results of a comparison can be seen on the control panel in the Logic box.

The optional compare logic is shown on the Intermediate Level Diagrams (ILD), page 37.

[13]

Self-modifying code, where the program changes its own instructions, was common in the past. A guide to IBM 1401 programming, 1961, has a whole chapter (6) on this, discussing how "we are able to operate on instructions in storage just as though they were data". Treating code as data wasn't done only by Lisp programmers.

In fact, the book calls the ability of a program to modify itself "by all odds the most important single feature of the stored program concept." As well as subroutine returns, IBM 1401 programmers used self-modifying code for indexing, address computation, and complex conditional branching.

On current machines, self-modifying code is rare because it's harder to debug and messes up the instruction pipeline.

[14]

For details on how the multiply and divide operations work internally, see the optional feature manual. This circuit has some complicated optimizations. For example, to speed up the repeated additions, it will add the doubled value instead if appropriate. But doubling a decimal value takes a fairly complicated circuit (unlike binary doubling, which is trivial). And there's error checking to make sure nothing goes wrong in the doubling.
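To see why decimal doubling needs real circuitry, here is a Python sketch that doubles a number one BCD digit at a time. In binary, doubling is a one-place shift. In BCD, the shift only gives a valid result for digits 0 to 4, and larger digits need a correction. This sketch uses the common "add 6" decimal-adjust trick, which is my own choice for illustration. The 1401's multiply/divide circuit isn't necessarily built this way.

```python
def double_bcd_digit(d: int) -> tuple[int, int]:
    """Double one BCD digit, returning (carry, digit)."""
    shifted = d << 1          # binary doubling: 7 (0111) becomes 14 (1110), not valid BCD
    if shifted > 9:
        shifted += 6          # decimal adjust: skip the six unused codes 10-15
    return shifted >> 4, shifted & 0xF


def double_decimal(digits: str) -> str:
    """Double a decimal digit string, low-order digit first."""
    result, carry = [], 0
    for ch in reversed(digits):
        next_carry, d = double_bcd_digit(int(ch))
        result.append(str(d + carry))   # d is always even, so d + carry stays a single digit
        carry = next_carry
    if carry:
        result.append("1")
    return "".join(reversed(result))


print(double_bcd_digit(7))      # (1, 4)
print(double_decimal("4567"))   # 9134
```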

[15]

The Sterling circuitry to support £sd math is even more complicated because shillings and pence are stored in a compressed form. The obvious representation is a two-digit field for pence (0 to 11) and a two-digit field for shillings (0 to 19). But to save precious memory and storage space, the BSI standard and an incompatible IBM standard use one-digit fields and special characters. A knob on the control panel selects which standard to use. The Sterling hardware must perform arithmetic on this compressed representation, as well as handling the non-decimal bases of shillings and pence.

This knob on the control panel of the IBM 1401 computer selects the storage mode for pence and shillings.

[16]

If you want to write a program for the 1401, instructions on using the ROPE simulator are here. It's a simple IDE that lets you edit assembly code (which is called Autocoder), assemble it, and then run it on the simulator.

Take a look at A guide to IBM 1401 Programming and Programming the 1401 if you want to understand how to program the 1401. The 1401 Reference Manual is also useful for understanding what the instructions do.

[17]

Each card also has a four-digit sequence number in the last columns.

This lets you re-sort the cards if you happen to drop the deck and scramble the program.

[18]

The first picture of the Mandelbrot set appears in a 1978 paper by Brooks and Matelski, prior to Mandelbrot's work. (Thanks to Robert Garner for pointing this out.)

There's some controversy over who "really" discovered the Mandelbrot set. See Who Discovered the Mandelbrot Set? in Scientific American for a discussion.